The rise of artificial intelligence (AI) has transformed many aspects of our daily lives. Unfortunately, cyber threats are no exception. Cybercriminals have started leveraging AI to generate more sophisticated phishing emails and other email security threats that are increasingly difficult to detect.

Healthcare professionals, who regularly handle sensitive patient data, must remain vigilant and adopt proactive measures to protect their organizations from these evolving threats.

Healthcare employees are highly susceptible to phishing attacks, and the numbers reflect it. According to the Verizon 2022 Data Breach Investigations Report, 82% of breaches involved the human element, including social attacks, errors, and misuse. Similarly, IBM Security reports that the healthcare industry is the most targeted for cyberattacks, with 74% of healthcare organizations reporting a data breach.

Characteristics of AI phishing emails

AI-generated phishing emails have several distinguishing features that differentiate them from traditional phishing attempts.

- More sophisticated and targeted content: AI phishing emails often use machine learning algorithms to analyze and mimic the recipient's profession, interests, or habits. This results in highly personalized messages that may appear more credible and harder to distinguish from legitimate emails.

- Improved grammar, spelling, and sentence structure: Unlike traditional phishing emails, which often contain grammatical errors and awkward phrasing, AI phishing emails often have near-perfect grammar and sentence structure. This makes them convincing and difficult to detect based on language alone.

- Usage of contextually relevant information: Phishing emails may incorporate contextually relevant information to create a sense of urgency or credibility. For example, a message might reference a recent event or news item related to the healthcare industry, making it seem more legitimate.

Daphne Ippolito, a senior research scientist at Google Brain, says, "Language models very, very rarely make typos. They're much better at generating perfect texts. A typo in the text is actually a really good indicator that it was human written."

Techniques for detecting AI-generated phishing emails

While HIPAA compliant email handles outbound email security, the following methods can help spot inbound phishing attacks and prevent the compromise of sensitive data:

- Inspect the sender's email address and domain: Check for inconsistencies or suspicious elements in the sender's email address and domain. Cybercriminals may use subtle variations, such as replacing an 'o' with a '0', to trick recipients into believing the message is from a trusted source.

- Look for unexpected or unsolicited emails: Be cautious with emails that were not expected or solicited, especially if they contain attachments or links. Even if the email appears to come from a known contact, verify its authenticity before opening any attachments or clicking links.

- Analyze the email's tone, style, and vocabulary: Compare the email with previous communications from the supposed sender. If there are inconsistencies in the tone, style, or vocabulary, this could indicate that the message is a phishing attempt.

- Examine URLs carefully: Hover over any hyperlinks in the email to see the actual URL without clicking on them. Look for unusual or misspelled domain names, and be cautious with shortened URLs, as they can hide the true destination.

- Check for generic greetings or signatures: Phishing emails may use generic greetings, such as "Dear user" or "Dear customer," instead of addressing the recipient by name. Also, look for generic or mismatched signatures that do not align with the sender's typical signature.

- Verify email content with the sender: If there is any doubt about the authenticity of an email, contact the sender through an alternate communication method to verify its legitimacy. Do not rely on any contact information provided within the suspicious email.

- Use inbound security tools: Inbound email security tools filter out phishing emails, malware, and other cyberattacks before they reach the inbox, protecting sensitive patient information.
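Two of the manual checks above, spotting a lookalike sender domain and comparing a link's visible text with its real destination, can be partly automated. The following is a minimal Python sketch under stated assumptions, not a production filter: `TRUSTED_DOMAINS`, the character-swap table, and all function names are hypothetical and chosen only for illustration.

```python
from email.utils import parseaddr
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical allow-list; a real deployment would load its own trusted domains.
TRUSTED_DOMAINS = {"examplehospital.org"}

# Common digit-for-letter swaps used in lookalike domains (illustrative, not exhaustive).
SWAPS = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s"})


def normalize_domain(domain: str) -> str:
    """Lowercase the domain and undo the digit-for-letter swaps listed above."""
    return domain.lower().translate(SWAPS)


NORMALIZED_TRUSTED = {normalize_domain(d) for d in TRUSTED_DOMAINS}


def is_lookalike_sender(from_header: str) -> bool:
    """True when the sender's domain is untrusted but normalizes to a trusted one."""
    _, address = parseaddr(from_header)
    domain = address.rpartition("@")[2].lower()
    if not domain or domain in TRUSTED_DOMAINS:
        return False
    return normalize_domain(domain) in NORMALIZED_TRUSTED


class LinkCollector(HTMLParser):
    """Collect (href, visible text) pairs for every <a> tag in an HTML body."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def _host(url_text: str) -> str:
    """Best-effort hostname extraction; returns '' when the text is not URL-like."""
    candidate = url_text if "://" in url_text else "//" + url_text
    try:
        return urlparse(candidate).hostname or ""
    except ValueError:
        return ""


def mismatched_links(html_body: str):
    """Return (text, href) pairs whose visible text names a different host than the href."""
    collector = LinkCollector()
    collector.feed(html_body)
    flagged = []
    for href, text in collector.links:
        shown_host = _host(text)
        # Only compare when the visible text itself looks like a hostname or URL.
        if "." in shown_host and " " not in shown_host and shown_host != _host(href):
            flagged.append((text, href))
    return flagged
```

A sender such as `billing@examp1ehospital.org` would be flagged by `is_lookalike_sender`, and a link that displays `https://portal.examplehospital.org` while pointing elsewhere would be returned by `mismatched_links`. Checks like these supplement, rather than replace, the human verification steps listed above.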

Deepfake audio and video

In addition to AI phishing emails, cybercriminals may utilize deepfake technology in their attacks. Deepfakes are AI-generated audio or video content that manipulates or fabricates the appearance or voice of a person to create realistic yet counterfeit media.

Awareness of this technology and its potential use in phishing attacks is crucial for healthcare professionals to stay vigilant and protect sensitive information.

In a live test at HIMSS 2023, Paubox CEO Hoala Greevy challenged the audience to spot the deepfake phishing attack by playing a real and fake voice message side by side. Only 50% of the audience was able to differentiate between the real and phony audio generated by ElevenLabs.

Understanding deepfake technology:

Deepfake algorithms use machine learning techniques like Generative Adversarial Networks (GANs) to create convincing fake audio or video content. These deepfakes are becoming increasingly sophisticated, making it harder to differentiate between real and fake content.

Potential use in phishing attacks:

Cybercriminals may use deepfake audio or video to impersonate a trusted individual, such as a colleague or executive, to trick recipients into revealing sensitive information or performing malicious actions. For example, a deepfake audio clip might imitate a supervisor's voice instructing an employee to transfer funds or share patient data.

Challenges in identifying deepfake content:

Detecting deepfakes can be challenging due to their realistic nature. Healthcare professionals should remain vigilant and be aware of the possibility of deepfake content in phishing attacks. To help detect deepfakes, consider factors such as audio or video quality, unusual speech patterns, or inconsistencies in the content's context.

Employing deepfake detection tools:

As deepfake technology advances, researchers and cybersecurity experts are developing tools and techniques to detect and combat deepfakes. Familiarize yourself with these tools and consider incorporating them into your organization's security protocols to strengthen defenses against deepfake-related attacks.

Tools to protect against AI phishing threats

- DMARC: DMARC is an email authentication standard that, according to Google, "helps mail administrators prevent hackers and other attackers from spoofing their organization and domain."

- Anti-display name spoofing tools: Display name spoofing attacks impersonate employees or departments, and anti-spoofing tools are designed to catch them. For example, ExecProtect quarantines any email that shares an employee's display name but comes from an unapproved email address.

- Zero trust email security: Zero trust is a framework that requires multiple levels of authentication beyond standard checks like DMARC. Zero trust email operates the same way, requiring multiple forms of proof that a sender is who they say they are.

- Geofencing: Geofencing sets a virtual, location-based boundary and quarantines incoming emails that originate outside that boundary.

- Multi-factor authentication (MFA): MFA adds a layer of security by requiring users to verify their identity using a second factor, such as a fingerprint or a one-time code sent to a mobile device. Implement MFA for all email accounts and other sensitive systems within your organization.
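To make two of the tools above more concrete, here is a small Python sketch of (1) reading the policy out of a DMARC TXT record and (2) a display name check in the spirit of the one described for anti-spoofing tools. It is an illustration under stated assumptions and does not represent how ExecProtect or any other product is implemented: `APPROVED_SENDERS`, the sample names and addresses, and the function names are all hypothetical.

```python
from email.utils import parseaddr


def parse_dmarc_record(txt: str) -> dict:
    """Split a DMARC TXT record such as 'v=DMARC1; p=reject' into a tag dict."""
    tags = {}
    for part in txt.split(";"):
        key, sep, value = part.partition("=")
        if sep:
            tags[key.strip().lower()] = value.strip()
    return tags


def dmarc_is_enforcing(txt: str) -> bool:
    """True when the record asks receivers to quarantine or reject failing mail."""
    tags = parse_dmarc_record(txt)
    return tags.get("v") == "DMARC1" and tags.get("p", "").lower() in {"quarantine", "reject"}


# Hypothetical directory: display names to protect, mapped to their approved addresses.
APPROVED_SENDERS = {
    "jane doe": {"jane.doe@examplehospital.org"},
}


def is_display_name_spoof(from_header: str) -> bool:
    """True when a protected display name arrives from an address that is not approved."""
    name, address = parseaddr(from_header)
    approved = APPROVED_SENDERS.get(name.strip().lower())
    return approved is not None and address.lower() not in approved
```

With these definitions, a record with `p=none` is reported as not enforcing (it only monitors), and a message from `Jane Doe <jane.doe@freemail.example>` is flagged because the display name matches a protected employee while the address does not.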

Read more: What is inbound email security?

Reporting and response protocols

If a phishing email is identified, the following steps can help healthcare professionals mitigate the damage and prevent future incidents:

- Establish a clear reporting procedure: Encourage employees to report suspicious emails to your IT security team. Make sure the reporting process is easy to follow and well-communicated to all staff members.

- Verify and analyze reported incidents: IT security teams should promptly investigate any reported phishing emails, verifying their authenticity and analyzing them for potential threats. This process can help identify patterns or indicators of compromise that may reveal additional security issues.

- Implement incident response plans: Develop and maintain a comprehensive incident response plan that outlines the steps to be taken in the event of a successful phishing attack or security breach. This plan should include details on how to contain the incident, assess the damage, recover from the attack, and prevent future incidents.

- Conduct post-incident analysis: After addressing a security incident, conduct a thorough analysis to identify the root cause, evaluate the effectiveness of your organization's response, and update your security protocols and training programs as needed.

- Share threat intelligence: Collaborate with other healthcare organizations and industry-specific cybersecurity groups to share information about emerging threats and best practices. This cooperative approach can help strengthen the overall security posture of the healthcare industry.

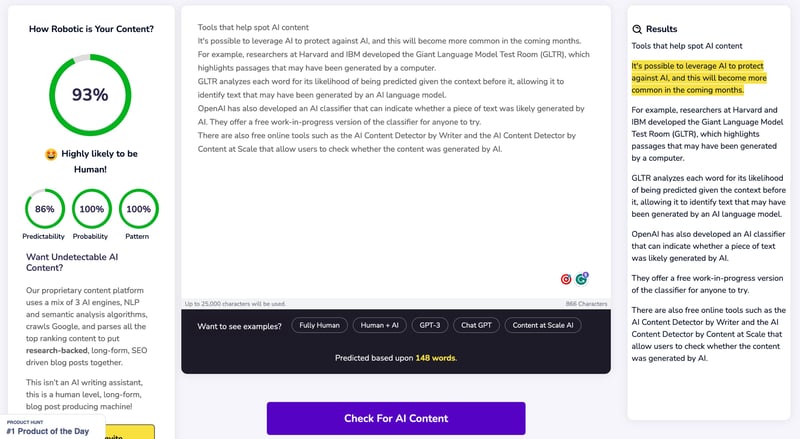

Tools that help spot AI content

It's possible to leverage AI to protect against AI, and this will become more common in the coming months. For example, researchers at Harvard and IBM developed the Giant Language Model Test Room (GLTR), which highlights passages that may have been generated by a computer.

GLTR analyzes each word for its likelihood of being predicted given the context before it, allowing it to identify text that may have been generated by an AI language model.
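The idea of scoring each word by how predictable it is can be illustrated with a toy example. The sketch below is not GLTR: it swaps in a simple bigram count model built from a sample corpus as a stand-in for the large language model a real tool would use, and every name in it is hypothetical. It reports, for each word, its rank among the words the model has seen follow the previous word (1 means most expected).

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus: str):
    """Count which words follow which in the corpus (a stand-in for a real language model)."""
    words = corpus.lower().split()
    model = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        model[prev][nxt] += 1
    return model


def word_ranks(model, text: str):
    """For each word after the first, return (word, rank) given the preceding word.

    Rank 1 means the model's most frequent continuation; None means never seen.
    """
    words = text.lower().split()
    ranks = []
    for prev, word in zip(words, words[1:]):
        ranked = [w for w, _ in model.get(prev, Counter()).most_common()]
        ranks.append((word, ranked.index(word) + 1 if word in ranked else None))
    return ranks


def top_k_fraction(ranks, k: int = 1) -> float:
    """Share of words whose rank is within the top k predictions."""
    if not ranks:
        return 0.0
    hits = sum(1 for _, rank in ranks if rank is not None and rank <= k)
    return hits / len(ranks)
```

Under this approach, text in which nearly every word falls within the model's top predictions is treated as more likely to be machine-generated, while surprising word choices point toward a human author. A bigram model is far too crude for real detection; it only shows the shape of the calculation.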

OpenAI has also developed an AI classifier that can indicate whether a piece of text was likely generated by AI. They offer a free work-in-progress version of the classifier for anyone to try.

There are also free online tools, such as the AI Content Detector by Writer and the AI Content Detector by Content at Scale, that let users check whether a piece of content was generated by AI.

A cat and mouse game

As artificial intelligence advances, cybercriminals will leverage this technology to create more sophisticated phishing emails and other security threats. Healthcare professionals must remain vigilant and proactive in the face of these evolving attacks to protect sensitive patient data and their organizations from potential breaches.

By understanding the characteristics of AI phishing emails, employing effective detection techniques, and implementing robust inbound email security practices, healthcare professionals can strengthen their defenses against these advanced threats. Furthermore, transparent reporting and response protocols are crucial in mitigating damage and preventing future incidents.

Ultimately, staying informed about the latest advancements in cybersecurity and fostering a culture of security awareness within your organization are essential steps in safeguarding sensitive data and maintaining the trust of your patients and colleagues.

Dean Levitt
